Are large node module dependencies an issue?

The other day, I had some friends frowning over the 800KB size of a node application. This brought to my attention that I never really worried about the size of my dependencies in a Node.js application.

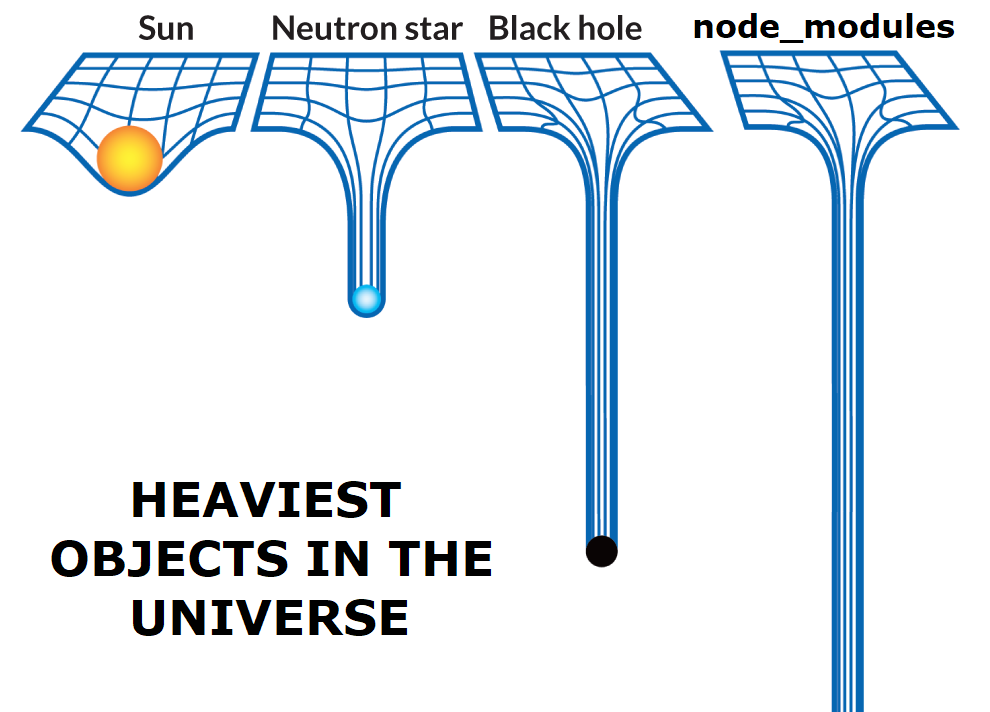

Which is odd, as I constantly worry about size when shipping JavaScript to the browser. In Node.js, on the other hand, the size of Node modules has become a meme by now. Many memes!

I have used Node.js for tooling and web applications and never thought about the size of my modules. Now that I mostly do Serverless (Lambdas, functions), I’m wondering whether large dependencies have an impact on the functions that pull them in.

So I set out on Twitter to ask the pros about their experience. Thanks to Nodeconf.eu and ScriptConf I have some connections to the Node and Serverless communities, and they provided me with all the insights.

The TLDR? It depends. For a "normal" Node application, it most likely isn't. It can be on Serverless, though!

This was the original question.

Node.js people: Has module size ever worried you in a production environment? Were there any significant performance drops when adding heavy libraries?

— Stefan Baumgartner (@ddprrt) June 19, 2020

Maybe the @nearform people (pinging @jasnell, @matteocollina, @addaleax) have some insights into that 😄

Brief tweets leave lots of room for details. So this question was way too generic to provide a simple yes or no. The issue can be much more varied and depends highly on people’s views:

- When we talk about large dependencies, what size are we talking about? When is something large?

- What causes an issue, and what do we see as issues (start-up performance, run-time performance, stability)?

So there was a lot of room to fill. And the wonderful people from the community filled it with their insights. Thank you so much for helping me here!

Let’s target the question from three different angles:

Regular Node.js apps

Matteo from Nearform never experienced any big trouble with big node modules. Especially not with regular Node.js apps.

I've never experienced any significant problem about this, even in serverless environments. The few times this has been a problem was solved by splitting said lambda/service into multiple small bits as not all the deps are needed everywhere.

— Matteo Collina (@matteocollina) June 19, 2020

Tim Perry has found some issues with CLI tools, where he wants to be as responsive as possible. He used pkg, one of Vercel’s many Node.js tools, to make them fast. pkg creates executables for Windows, Mac and Linux that bundle the correct Node.js version with the application.
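Matteo's point that not all dependencies are needed everywhere can also be applied inside a single module. The following is a minimal sketch of a related technique, lazy loading, and not what Matteo describes literally: the dependency is only required on the code path that uses it. The `handle` function and the `action` field are hypothetical, and the built-in `zlib` module stands in for a large third-party dependency. A real Lambda handler would typically be `async`.

```javascript
// Holds the "heavy" module once it has been loaded.
let heavyModule;

// Loads the heavy dependency on first use only.
// zlib is a stand-in here; imagine a multi-megabyte third-party package.
function getHeavyModule() {
  if (!heavyModule) {
    heavyModule = require('zlib');
  }
  return heavyModule;
}

// Hypothetical handler: only the "compress" path pays the loading cost.
function handle(event) {
  if (event.action === 'compress') {
    const compressed = getHeavyModule().gzipSync(Buffer.from(event.body));
    return { statusCode: 200, body: compressed.toString('base64') };
  }
  // The cheap path never touches the heavy module.
  return { statusCode: 200, body: 'pong' };
}
```

Splitting the function into two separately deployed functions goes one step further, because the unused dependency is then not even part of the deployment package.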

Serverless

Whereas regular Node.js apps boot once and then run, Serverless functions boot once and then … die at some point. Also, Serverless functions run in (Docker) containers that need to be booted as well. And even if everything is supposed to be fast, it isn’t as fast as running it on a server that understands Node.js or on your local machine.

This is also what Franziska, who worked with the V8 team and is now with GCP, points out:

It's a problem for lamdba/functions. Just parsing large deps takes significant time.

— Franziska Hinkelmann, Ph.D. (@fhinkel) June 19, 2020

So what does significant mean? Mikhail Shilkov did some great research on that topic. He deployed three different versions of an app that does roughly the same thing (Hello World style), but with differently sized dependencies: one as-is, around 1KB; one with 14MB of dependencies; and one with 35MB of dependencies.

On GCP, Azure and AWS cold start time rose significantly, with AWS being the fastest:

- The 1KB as-is version always started below 0.5 seconds

- Cold start of the 14MB version took between 1.5 seconds and 2.5 seconds

- Cold start of the 35MB version took between 3.3 seconds and 5.8 seconds

With other vendors, cold start can last up to 23 seconds. This is significant. Be sure to check out his article and the details of each provider! Big shout-out to Simona Cotin for pointing me to this one!

James from Nearform seconds this opinion and points to some work from Anna (who works for Nearform on Node) to possibly enable V8 snapshots for this.
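To get a rough local feeling for the load cost Franziska mentions, you can time a `require()` call. This is a minimal sketch, not how Mikhail ran his measurements: `timeRequire` is a hypothetical helper, and the built-in `zlib` module is only a placeholder for whichever dependency you want to inspect. Numbers from your own machine will not match cold start times on a cloud provider.

```javascript
const { performance } = require('perf_hooks');

// Returns how long a require() call for the given module takes, in milliseconds.
// This covers resolving, reading, parsing and executing the module.
function timeRequire(moduleName) {
  const start = performance.now();
  require(moduleName);
  return performance.now() - start;
}

// Replace 'zlib' with the dependency you are curious about.
const firstLoad = timeRequire('zlib');
// Node.js caches loaded modules, so a repeated require() skips the work.
const cachedLoad = timeRequire('zlib');

console.log(`first load: ${firstLoad.toFixed(2)} ms`);
console.log(`cached load: ${cachedLoad.toFixed(2)} ms`);
```

Only the first call in a fresh process is meaningful, which is also why the cost shows up on cold starts and not on warm invocations.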

The DevOps view

Frederic, Sebastian, and Marvin all point to the cost of CI build time and Docker image size.

+ 1 to Docker image size, CI build time and slow startup which has already been mentioned.

— Sebastian Gierlinger (@sebgie) June 19, 2020

There is also a deployment package size limit of 50 MB (zipped), 250 MB (unzipped) on AWS Lambda (https://t.co/TA5x2jHozm). Using rollup/ncc can save your deployment in this case.

Which is definitely an issue, especially if you pay by the minute in your CI environment.
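The package size limit from the tweet above is something you can check before deploying. Here is a minimal sketch that sums up the file sizes in a directory and compares the total to the 250 MB unzipped limit. The helper names are hypothetical, and treating 1 MB as 1024 × 1024 bytes is an assumption; check your provider's documentation for the exact figure.

```javascript
const fs = require('fs');
const path = require('path');

// 250 MB unzipped, as quoted in the tweet. The byte conversion is an assumption.
const UNZIPPED_LIMIT_BYTES = 250 * 1024 * 1024;

// Recursively sums the sizes of all regular files below a directory.
// Symbolic links are skipped, so linked packages are not counted.
function directorySize(dir) {
  let total = 0;
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const fullPath = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      total += directorySize(fullPath);
    } else if (entry.isFile()) {
      total += fs.statSync(fullPath).size;
    }
  }
  return total;
}

// Returns true if the directory stays within the given limit.
function fitsUnzippedLimit(dir, limit = UNZIPPED_LIMIT_BYTES) {
  return directorySize(dir) <= limit;
}
```

Calling `fitsUnzippedLimit` on the folder you are about to deploy in a CI step would flag an oversized package before the upload fails. It says nothing about the zipped limit, which depends on how well your files compress.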

Frederic also found the best way to close this round-up:

With serverless, this should't be too much of a problem. If your single-purpose function requires *a lot* of dependencies to get the job done, you're probably doing something wrong and should reconsider the scope of it.

— Frederic'); DROP TABLE tweets;-- (@fhemberger) June 19, 2020